A paper by Yongwei Li, Yongzhi Jiang and Xinkai Wu, entitled “TrajPT: A trajectory data-based pre-trained transformer model for learning multi-vehicle interactions”, has been published in Transportation Research Part C: Emerging Technologies. The authors propose a model designed to learn spatial–temporal interactions among vehicles, pre-trained on the pNEUMA dataset.

Highlights

- Propose TrajPT, a trajectory data-based pre-trained transformer model leveraging the LLM paradigm.

- Design a joint spatial-temporal attention module to extract spatial and temporal interaction features.

- Present a graph-based interaction construction method to process unannotated trajectory data.

Abstract

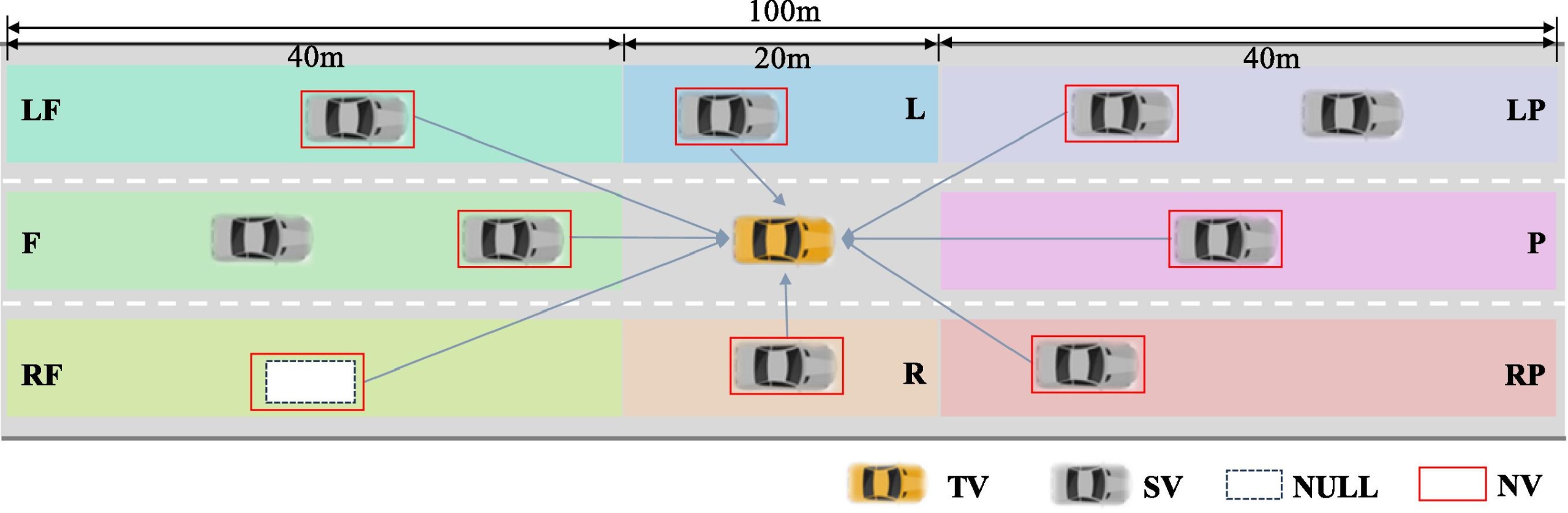

Modeling and learning interactions with surrounding vehicles are critical for the safety and efficiency of autonomous vehicles. In this paper, we propose TrajPT, a Trajectory data-based Pre-trained Transformer model designed to learn spatial–temporal interactions among vehicles from large-scale real-world trajectory data. Inspired by pre-trained large language models, TrajPT adopts an autoregressive learning framework and a pre-training paradigm, and can be fine-tuned for different autonomous driving downstream tasks. To capture complex spatial–temporal interactions among vehicles, we utilize a spatial–temporal scene graph to encode observed vehicle trajectories and introduce a novel graph-based joint spatial–temporal attention module, which extracts spatial interactions within single frames and temporal dependencies across frames. TrajPT is pre-trained on pNEUMA, the largest publicly available vehicle trajectory dataset. We validate the performance of TrajPT by fine-tuning it on two downstream tasks: lane-changing prediction and trajectory prediction. Extensive experimental results demonstrate that the proposed TrajPT outperforms the baseline model and exhibits significant generalization performance across multiple datasets.
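To make the attention idea in the abstract more concrete, here is a minimal NumPy sketch of one way a graph mask could combine spatial edges within a frame and causal temporal edges across frames. It is an illustration under stated assumptions, not the authors' implementation: the function names (`build_joint_mask`, `masked_attention`), the per-frame adjacency input, the single head, and the identity query/key/value projections are all hypothetical simplifications.

```python
import numpy as np

def build_joint_mask(adj):
    """Build a (T*N, T*N) boolean attention mask from per-frame adjacency.

    adj: (T, N, N) boolean array; adj[t, i, j] is True when vehicle j is a
    neighbour of vehicle i in frame t (hypothetical scene-graph edges).
    Token index is t * N + i.
    """
    T, N, _ = adj.shape
    eye = np.eye(N, dtype=bool)
    mask = np.zeros((T * N, T * N), dtype=bool)
    for t in range(T):
        rows = slice(t * N, (t + 1) * N)
        # Spatial edges: within frame t, attend to neighbours and to oneself.
        mask[rows, rows] = adj[t] | eye
        # Temporal edges: attend to the same vehicle in earlier frames only,
        # which keeps the mask causal (no access to future frames).
        for s in range(t):
            mask[rows, s * N:(s + 1) * N] = eye
    return mask

def masked_attention(x, mask):
    """Single-head scaled dot-product attention restricted by a boolean mask.

    x: (L, d) token features. Identity projections are assumed for brevity.
    Returns the attended features and the attention weights.
    """
    d = x.shape[-1]
    scores = x @ x.T / np.sqrt(d)
    scores = np.where(mask, scores, -np.inf)      # block disallowed pairs
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ x, weights

# Toy usage: 3 frames, 4 vehicles, 8-dimensional features (all made up).
rng = np.random.default_rng(0)
T, N, d = 3, 4, 8
adj = rng.random((T, N, N)) < 0.5
adj = adj | adj.transpose(0, 2, 1)                # make edges symmetric
x = rng.standard_normal((T * N, d))
out, weights = masked_attention(x, build_joint_mask(adj))
```

Because every token can always attend to itself, each row of the mask has at least one allowed entry, so the softmax is well defined. A real model would add learned projections, multiple heads, and positional or edge features.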

You can read the paper here.