A paper by Zhiwei Yang, Zuduo Zheng, Jiwon Kim and Hesham Rakha, entitled “Eco-driving strategies using reinforcement learning for mixed traffic in the vicinity of signalized intersections”, was published in Transportation Research Part C: Emerging Technologies. The authors use trajectories from the pNEUMA dataset, rather than simulated trajectories, to train and test the reinforcement learning methods.

Highlights

- This study proposes autonomous eco-driving strategies for mixed traffic with limited information available.

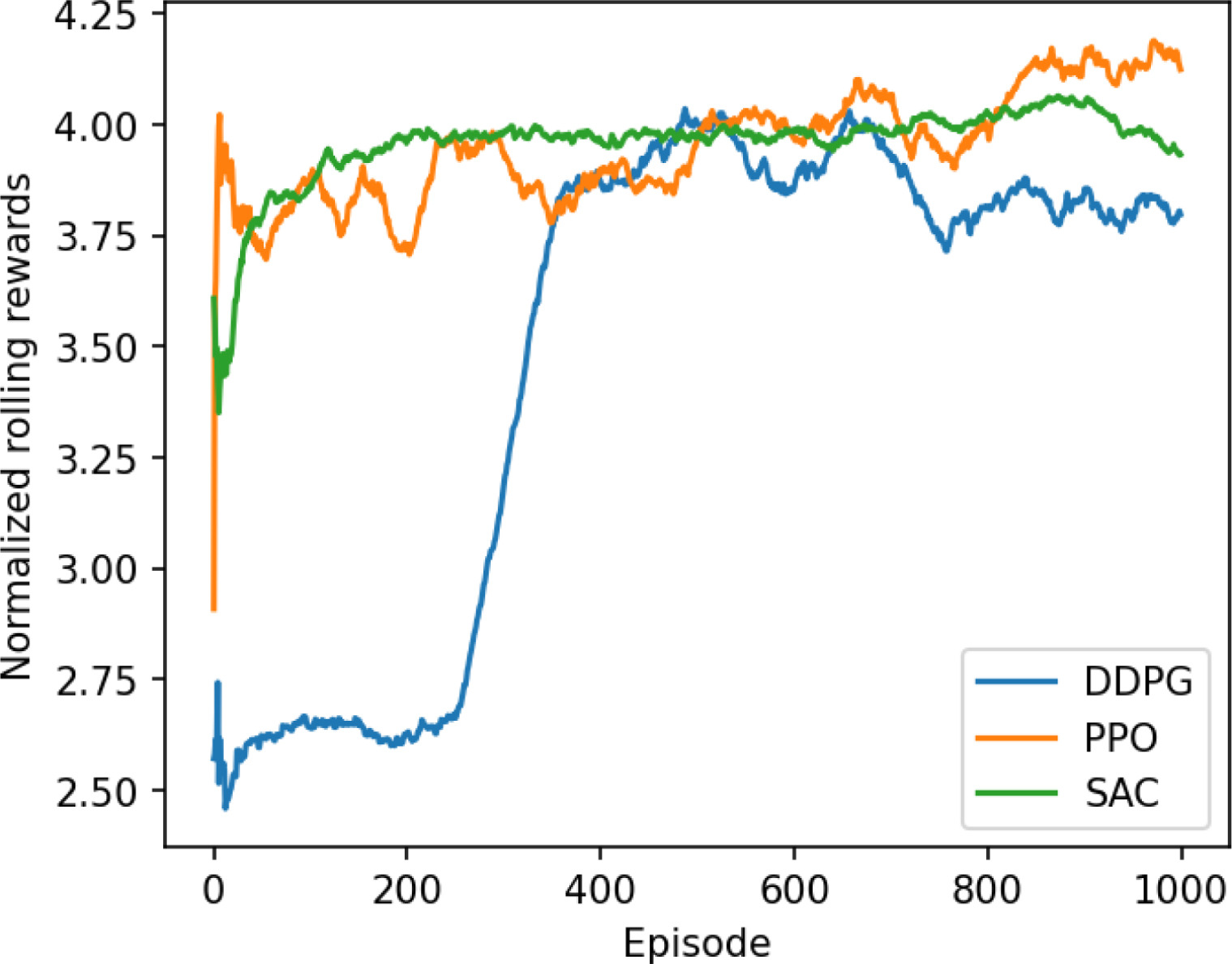

- Three popular Reinforcement Learning algorithms for continuous actions, i.e., DDPG, PPO and SAC, are considered.

- A hybrid policy is proposed to take advantage of Reinforcement Learning and an analytical car-following model.

- A trade-off among safety, efficiency, energy, and comfort is considered in the reward function.

- SAC with the hybrid policy has the best capability in the temporal and spatial generalization.
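To make the idea of a multi-objective reward more concrete, the following is a minimal sketch of a weighted-sum reward that trades off safety, efficiency, energy and comfort. It is not the reward used in the paper: the function name `eco_reward`, the individual terms, the energy proxy, the weights and all default values are assumptions chosen only for illustration.

```python
def eco_reward(speed, accel, prev_accel, gap,
               dt=0.1, v_des=13.9, min_gap=2.0,
               weights=(1.0, 0.5, 0.3, 0.2)):
    """Hypothetical weighted-sum reward; every term is an illustrative assumption.

    speed      : ego speed in m/s
    accel      : current acceleration in m/s^2
    prev_accel : acceleration at the previous step in m/s^2
    gap        : bumper-to-bumper distance to the leading vehicle in m
    """
    w_safe, w_eff, w_energy, w_comf = weights

    # Safety: fixed penalty when the gap drops below a minimum distance.
    safety = -1.0 if gap < min_gap else 0.0

    # Efficiency: penalise deviation from an assumed desired speed.
    efficiency = -abs(speed - v_des) / v_des

    # Energy: crude proxy, penalising positive tractive effort (speed * accel).
    energy = -max(0.0, speed * accel)

    # Comfort: penalise squared jerk (rate of change of acceleration).
    jerk = (accel - prev_accel) / dt
    comfort = -jerk ** 2

    return (w_safe * safety + w_eff * efficiency
            + w_energy * energy + w_comf * comfort)
```

With these assumed terms, cruising at the desired speed with a large gap and no change in acceleration yields a reward of zero, and any unsafe gap, speed deviation, positive tractive effort or jerk lowers it. In practice the relative weights would need tuning, since they determine which objective dominates.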

Abstract

This study proposes autonomous eco-driving strategies for a traffic environment with limited information available based on three popular Reinforcement Learning (RL) algorithms for continuous actions, i.e., Deep Deterministic Policy Gradient (DDPG), Proximal Policy Optimization (PPO) and Soft Actor–Critic (SAC) approaches, to address a serious challenge in the literature. The challenge is, despite the potential of connected and automated vehicles (CAVs) to diminish traffic interruptions caused by traffic signals on urban streets, for a long period of time, the accurate and immediate exchange of information via vehicle-to-everything (V2X) communication remains a problem because of the low penetration rate of CAVs and communication quality issues. The available information is assumed to only include signal phase and timing (SPaT) information and traffic states of the leading vehicle, respectively. To optimize the overall driving performance, a trade-off among safety, efficiency, energy, and ride comfort is considered in the reward function. Moreover, a hybrid policy is proposed to take advantage of RL and an analytical car-following model. The strategies enable CAVs to safely, efficiently and comfortably traverse signalized intersections while all the other vehicles are unconnected human-driven vehicles (HVs). Trajectories from the pNEUMA dataset are used to train and test the proposed models. The performance of the proposed models is compared to the naturalistic driving data, the Intelligent Driver Model (IDM) and an eco-driving method based on rules and optimization (Trigo). Testing results show that DDPG and SAC with the hybrid policy (HybridSAC) have the best overall performance, i.e., better than human drivers in all aspects; better safety, energy and comfort than the Trigo model; similar performance to the IDM but better energy efficiency. 
The temporal and spatial generalization capabilities of the RL-based methods are also tested, among which HybridSAC has the best performance as a whole.
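For readers unfamiliar with the Intelligent Driver Model used as a baseline, the sketch below implements its standard acceleration formula. The parameter values are common illustrative defaults, not the calibration used in the paper. The `hybrid_action` function is purely hypothetical: the abstract does not describe how the hybrid policy combines RL with the car-following model, so taking the smaller of the two accelerations is only one plausible way to do it.

```python
import math


def idm_acceleration(v, v_lead, gap,
                     v0=13.9, T=1.5, s0=2.0, a_max=1.0, b=2.0, delta=4.0):
    """Standard IDM acceleration in m/s^2.

    v, v_lead : ego and leader speeds in m/s
    gap       : bumper-to-bumper distance in m (must be > 0)
    v0        : desired speed, T: desired time headway, s0: standstill gap,
    a_max     : maximum acceleration, b: comfortable deceleration.
    Default parameter values are illustrative assumptions.
    """
    dv = v - v_lead  # approach rate (positive when closing in on the leader)
    # Desired dynamic gap s*.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


def hybrid_action(rl_accel, v, v_lead, gap, a_min=-3.0, a_max=1.0):
    """Hypothetical hybrid rule: cap the RL action by the IDM acceleration.

    This is an assumed combination rule for illustration only, not the
    policy proposed in the paper.
    """
    car_following = idm_acceleration(v, v_lead, gap, a_max=a_max)
    # Take the more conservative action, then clip to actuator limits.
    return max(a_min, min(rl_accel, car_following, a_max))
```

For example, `hybrid_action(0.2, 0.0, 0.0, 1e9)` leaves the RL action unchanged because the road ahead is effectively free, whereas `hybrid_action(1.0, 10.0, 0.0, 5.0)` is overridden by strong braking because a stationary leader is only 5 m ahead.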

You can read the paper here.